In this post, we provision Cloud SQL instances with two connectivity options (public IP and private IP) using Terraform, then access them from a Spring Boot application deployed on Google Cloud Run. Terraform creates the infrastructure and configures the networking components. The Spring Boot application then connects to each instance through the Cloud SQL socket factory, using the IP type that matches the instance.

Enabled APIs

The following APIs need to be enabled for this project:

- Cloud SQL API

- Cloud Run API

- Cloud Build API

- Artifact Registry API

- Cloud Logging API

- Serverless VPC Access API

Terraform Project Overview

- A VPC network named nw1-vpc is created, along with two subnets (nw1-vpc-sub1-us-central1 and nw1-vpc-sub3-us-west1) in different regions.

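- Public IP Instance: A Cloud SQL instance named main-instance with a public IP address is created. No authorized networks are configured, so clients reach it through the Cloud SQL connector (the socket factory used by the application) rather than through direct IP connections.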

- Private IP (VPC) Instance: A Cloud SQL instance named private-instance with a private IP address is created, accessible only within the VPC network.

- Firewall rules are defined to control access to the VPC network.

- A Cloud NAT gateway is configured in the secondary region so that instances in that region without external IP addresses can access the internet.

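- A service account named cloudsql-service-account-id is created and granted the Cloud SQL client, editor, and admin roles, along with Project IAM Admin and the Serverless VPC Access service agent role. Connecting to an instance only requires roles/cloudsql.client, so the broader roles can be dropped outside a demo.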

- A VPC Connector named private-cloud-sql is provisioned for enabling VPC access from Google Cloud services like Cloud Run.
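The address ranges used by these resources are defined in network.tf further down: 10.10.1.0/24 and 10.10.2.0/24 for the subnets, 10.10.3.0/28 for the VPC Connector, and 10.10.0.0/16 as the source range of the internal firewall rule. A connector range must not overlap an existing subnet, so a quick sanity check of the plan can be useful. The following is a minimal sketch in plain Java; the class CidrPlanCheck and its methods are hypothetical helpers, not part of the project.

```java
import java.util.List;

/** Illustrative IPv4 CIDR helper; not part of the original project. */
final class CidrPlanCheck {

    /** Returns {first, last} address of a CIDR block as unsigned 32-bit values stored in longs. */
    static long[] range(String cidr) {
        String[] parts = cidr.split("/");
        int prefix = Integer.parseInt(parts[1]);
        long address = 0;
        for (String octet : parts[0].split("\\.")) {
            address = (address << 8) | Integer.parseInt(octet);
        }
        long size = 1L << (32 - prefix);     // number of addresses in the block
        long first = address & ~(size - 1);  // clear the host bits
        return new long[] {first, first + size - 1};
    }

    /** True when the two blocks share at least one address. */
    static boolean overlaps(String a, String b) {
        long[] ra = range(a);
        long[] rb = range(b);
        return ra[0] <= rb[1] && rb[0] <= ra[1];
    }

    /** True when every address of inner lies inside outer. */
    static boolean contains(String outer, String inner) {
        long[] ro = range(outer);
        long[] ri = range(inner);
        return ro[0] <= ri[0] && ri[1] <= ro[1];
    }

    public static void main(String[] args) {
        // Ranges copied from network.tf: two subnets and the VPC Connector.
        List<String> ranges = List.of("10.10.1.0/24", "10.10.2.0/24", "10.10.3.0/28");
        for (int i = 0; i < ranges.size(); i++) {
            for (int j = i + 1; j < ranges.size(); j++) {
                System.out.printf("%s vs %s overlap: %b%n",
                        ranges.get(i), ranges.get(j), overlaps(ranges.get(i), ranges.get(j)));
            }
            System.out.printf("%s inside 10.10.0.0/16: %b%n",
                    ranges.get(i), contains("10.10.0.0/16", ranges.get(i)));
        }
    }
}
```

Because all three ranges fall inside 10.10.0.0/16, traffic from the connector is also matched by the internal allow rule.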

Project Structure

terraform-project/
├── main.tf
├── network.tf
├── iam.tf
├── serviceaccount.tf
├── provider.tf
├── variables.tf
└── terraform.tfvars

Provider Configuration

In the provider.tf file, we define the required providers and configure the Google Cloud provider with the project ID, region, and zone:

provider "google" {

project = var.project_id

region = var.region

zone = var.zone

}

The project_id, region, and zone variables are defined in the variables.tf file and assigned values in the terraform.tfvars file.

Virtual Private Cloud (VPC) Network and Subnets

In the network.tf file, we create the VPC network and subnets:

resource "google_compute_network" "nw1-vpc" {

project = var.project_id

name = "nw1-vpc"

auto_create_subnetworks = false

mtu = 1460

}

resource "google_compute_subnetwork" "nw1-subnet1" {

name = "nw1-vpc-sub1-${var.region}"

network = google_compute_network.nw1-vpc.id

ip_cidr_range = "10.10.1.0/24"

region = var.region

private_ip_google_access = true

}

resource "google_compute_subnetwork" "nw1-subnet2" {

name = "nw1-vpc-sub3-us-west1"

network = google_compute_network.nw1-vpc.id

ip_cidr_range = "10.10.2.0/24"

region = var.sec_region

private_ip_google_access = true

}

Cloud SQL Instances

In the main.tf file, we create the Cloud SQL instances with different connectivity options:

- Public IP Instance

resource "google_sql_database_instance" "my_public_instance" {

project = var.project_id

name = "main-instance"

database_version = "POSTGRES_15"

region = var.region

deletion_protection = false

settings {

tier = "db-f1-micro"

}

}

- Private IP (VPC) Instance

resource "google_sql_database_instance" "my_private_instance" {

depends_on = [google_service_networking_connection.private_vpc_connection]

project = var.project_id

name = "private-instance"

region = var.region

database_version = "POSTGRES_15"

deletion_protection = false

settings {

tier = "db-f1-micro"

ip_configuration {

ipv4_enabled = false

private_network = google_compute_network.nw1-vpc.self_link

enable_private_path_for_google_cloud_services = true

}

}

}

In the network.tf file, we configure various networking components:

- Firewall Rules

resource "google_compute_firewall" "nw1-ssh-icmp-allow" {

name = "nw1-vpc-ssh-allow"

network = google_compute_network.nw1-vpc.id

allow {

protocol = "icmp"

}

allow {

protocol = "tcp"

ports = ["22"]

}

source_ranges = ["39.33.11.48/32"]

target_tags = ["nw1-vpc-ssh-allow"]

priority = 1000

}

resource "google_compute_firewall" "nw1-internal-allow" {

name = "nw1-vpc-internal-allow"

network = google_compute_network.nw1-vpc.id

allow {

protocol = "icmp"

}

allow {

protocol = "udp"

ports = ["0-65535"]

}

allow {

protocol = "tcp"

ports = ["0-65535"]

}

source_ranges = ["10.10.0.0/16"]

priority = 1100

}

resource "google_compute_firewall" "nw1-iap-allow" {

name = "nw1-vpc-iap-allow"

network = google_compute_network.nw1-vpc.id

allow {

protocol = "icmp"

}

allow {

protocol = "tcp"

ports = ["0-65535"]

}

source_ranges = ["35.235.240.0/20"]

priority = 1200

}

- NAT Gateway

resource "google_compute_address" "natpip" {

name = "ipv4-address"

region = var.sec_region

}

resource "google_compute_router" "router1" {

name = "nat-router1"

region = var.sec_region

network = google_compute_network.nw1-vpc.id

bgp {

asn = 64514

}

}

resource "google_compute_router_nat" "nat1" {

name = "natgw1"

router = google_compute_router.router1.name

region = var.sec_region

nat_ip_allocate_option = "MANUAL_ONLY"

nat_ips = [google_compute_address.natpip.self_link]

source_subnetwork_ip_ranges_to_nat = "ALL_SUBNETWORKS_ALL_IP_RANGES"

min_ports_per_vm = 256

max_ports_per_vm = 512

log_config {

enable = true

filter = "ERRORS_ONLY"

}

}

- VPC Peering

resource "google_compute_global_address" "private_ip_address" {

name = google_compute_network.nw1-vpc.name

purpose = "VPC_PEERING"

address_type = "INTERNAL"

prefix_length = 16

network = google_compute_network.nw1-vpc.name

}

resource "google_service_networking_connection" "private_vpc_connection" {

network = google_compute_network.nw1-vpc.id

service = "servicenetworking.googleapis.com"

reserved_peering_ranges = [google_compute_global_address.private_ip_address.name]

}

- Service Account and IAM Roles

resource "google_service_account" "cloudsql_service_account" {

project = var.project_id

account_id = "cloudsql-service-account-id"

display_name = "Service Account for Cloud SQL"

}

resource "google_project_iam_member" "member-role" {

depends_on = [google_service_account.cloudsql_service_account]

for_each = toset([

"roles/cloudsql.client",

"roles/cloudsql.editor",

"roles/cloudsql.admin",

"roles/resourcemanager.projectIamAdmin",

"roles/vpcaccess.serviceAgent"

])

role = each.key

project = var.project_id

member = "serviceAccount:${google_service_account.cloudsql_service_account.email}"

}

- VPC Connector

resource "google_vpc_access_connector" "private-cloud-sql" {

project = var.project_id

name = "private-cloud-sql"

region = var.region

network = google_compute_network.nw1-vpc.id

machine_type = "e2-micro"

ip_cidr_range = "10.10.3.0/28"

}

Spring Boot Application

The Spring Boot application is designed to connect to the Cloud SQL instances provisioned by Terraform. Here are the key components:

Data Sources:

- Multiple data sources are configured in the application.yaml file, one for each Cloud SQL instance (public IP and private IP).

Database Configurations:

- Separate configuration classes (PublicIPAddresspostgresConfig and PrivateIPAddressVPCpostgresConfig) are defined to set up the database connections using the Cloud SQL Postgres Socket Factory.

Entity Models and Repositories:

- Entity models (Table1 and Table2) and corresponding repositories are created for interacting with the databases.

Services and Controllers:

- Services (Table1Service and Table2Service) and controllers (Table1Controller and Table2Controller) are implemented to handle database operations and expose REST APIs.

Dockerization:

- The Spring Boot application is dockerized using a Dockerfile, allowing it to be packaged and deployed as a container image.

Project Structure

demo-cloudsql/
├── src/
│   ├── main/
│   │   ├── java/
│   │   │   └── com/
│   │   │       └── henry/
│   │   │           └── democloudsql/
│   │   │               ├── configuration/
│   │   │               │   ├── PrivateIPAddressVPCpostgresConfig.java
│   │   │               │   └── PublicIPAddresspostgresConfig.java
│   │   │               ├── controller/
│   │   │               │   ├── HelloWorldController.java
│   │   │               │   ├── Table1Controller.java
│   │   │               │   └── Table2Controller.java
│   │   │               ├── model/
│   │   │               │   ├── privateipvpc/
│   │   │               │   │   └── Table2.java
│   │   │               │   └── publicip/
│   │   │               │       └── Table1.java
│   │   │               ├── repository/
│   │   │               │   ├── privateipvpc/
│   │   │               │   │   └── Table2Repository.java
│   │   │               │   └── publicip/
│   │   │               │       └── Table1Repository.java
│   │   │               ├── service/
│   │   │               │   ├── impl/
│   │   │               │   │   ├── Table1ServiceImpl.java
│   │   │               │   │   └── Table2ServiceImpl.java
│   │   │               │   ├── Table1Service.java
│   │   │               │   └── Table2Service.java
│   │   │               └── DemoCloudSqlApplication.java
│   │   └── resources/
│   │       ├── application.yaml
│   │       ├── data.sql
│   │       └── schema.sql
│   └── test/
│       └── java/
│           └── com/
│               └── henry/
│                   └── democloudsql/
│                       └── DemoCloudSqlApplicationTests.java
├── Dockerfile
└── pom.xml

Data Sources Configuration

In the application.yaml file, we configure the data sources for the different Cloud SQL instances:

spring:
  datasource:
    public-ip:
      url: jdbc:postgresql:///
      database: my-database1
      cloudSqlInstance: YOUR_PROJECT_ID:us-central1:main-instance
      username: henry
      password: mypassword
      ipTypes: PUBLIC
      socketFactory: com.google.cloud.sql.postgres.SocketFactory
      driverClassName: org.postgresql.Driver
    private-ip:
      url: jdbc:postgresql:///
      database: my-database2
      cloudSqlInstance: YOUR_PROJECT_ID:us-central1:private-instance
      username: henry
      password: mypassword
      ipTypes: PRIVATE
      socketFactory: com.google.cloud.sql.postgres.SocketFactory
      driverClassName: org.postgresql.Driver
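To make the role of these fields concrete, the sketch below assembles the equivalent single-string JDBC URL, where the socket factory settings travel as query parameters instead of separate data source properties. It is only an illustration under stated assumptions: the class CloudSqlJdbcUrl is hypothetical, and the values are assumed to contain no characters that need URL encoding. The application itself passes these settings through HikariCP properties, as shown in the configuration classes.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

/** Hypothetical helper that renders the YAML fields as one JDBC URL. */
final class CloudSqlJdbcUrl {

    /**
     * @param database               database name, e.g. my-database1
     * @param instanceConnectionName PROJECT:REGION:INSTANCE, e.g. my-project:us-central1:main-instance
     * @param ipTypes                PUBLIC or PRIVATE, matching the instance's connectivity
     */
    static String build(String database, String instanceConnectionName, String ipTypes) {
        // LinkedHashMap keeps the parameters in insertion order for a stable URL.
        Map<String, String> params = new LinkedHashMap<>();
        params.put("cloudSqlInstance", instanceConnectionName);
        params.put("socketFactory", "com.google.cloud.sql.postgres.SocketFactory");
        params.put("ipTypes", ipTypes);

        String query = params.entrySet().stream()
                .map(e -> e.getKey() + "=" + e.getValue())
                .collect(Collectors.joining("&"));

        // The host part stays empty: the socket factory resolves the instance address.
        return "jdbc:postgresql:///" + database + "?" + query;
    }

    public static void main(String[] args) {
        System.out.println(build("my-database1", "my-project:us-central1:main-instance", "PUBLIC"));
        System.out.println(build("my-database2", "my-project:us-central1:private-instance", "PRIVATE"));
    }
}
```

The only difference between the two data sources is the instance connection name and the ipTypes value; the driver and socket factory are the same.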

Database Configuration Classes

- PublicIPAddresspostgresConfig.java

package com.henry.democloudsql.configuration;

import com.zaxxer.hikari.HikariConfig;

import com.zaxxer.hikari.HikariDataSource;

import org.springframework.beans.factory.annotation.Value;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.context.annotation.Primary;

import org.springframework.data.jpa.repository.config.EnableJpaRepositories;

import org.springframework.orm.jpa.JpaTransactionManager;

import org.springframework.orm.jpa.JpaVendorAdapter;

import org.springframework.orm.jpa.LocalContainerEntityManagerFactoryBean;

import org.springframework.orm.jpa.vendor.HibernateJpaVendorAdapter;

import org.springframework.transaction.PlatformTransactionManager;

import javax.naming.NamingException;

import javax.sql.DataSource;

import java.util.Properties;

@Configuration

@EnableJpaRepositories(

basePackages = "com.henry.democloudsql.repository.publicip",

entityManagerFactoryRef = "mySchemaPublicIPEntityManager",

transactionManagerRef = "mySchemaPublicIPTransactionManager"

)

public class PublicIPAddresspostgresConfig {

@Value("${spring.datasource.public-ip.url}")

private String url;

@Value("${spring.datasource.public-ip.database}")

private String database;

@Value("${spring.datasource.public-ip.cloudSqlInstance}")

private String cloudSqlInstance;

@Value("${spring.datasource.public-ip.username}")

private String username;

@Value("${spring.datasource.public-ip.password}")

private String password;

@Value("${spring.datasource.public-ip.ipTypes}")

private String ipTypes;

@Value("${spring.datasource.public-ip.socketFactory}")

private String socketFactory;

@Value("${spring.datasource.public-ip.driverClassName}")

private String driverClassName;

@Bean

@Primary

public LocalContainerEntityManagerFactoryBean mySchemaPublicIPEntityManager()

throws NamingException {

LocalContainerEntityManagerFactoryBean em

= new LocalContainerEntityManagerFactoryBean();

em.setDataSource(mySchemaPublicIPDataSource());

em.setPackagesToScan("com.henry.democloudsql.model.publicip");

JpaVendorAdapter vendorAdapter = new HibernateJpaVendorAdapter();

em.setJpaVendorAdapter(vendorAdapter);

em.setJpaProperties(mySchemaPublicIPHibernateProperties());

return em;

}

@Bean

@Primary

public DataSource mySchemaPublicIPDataSource() throws IllegalArgumentException {

HikariConfig config = new HikariConfig();

config.setJdbcUrl(url + database);

config.setUsername(username);

config.setPassword(password);

config.addDataSourceProperty("socketFactory", socketFactory);

config.addDataSourceProperty("cloudSqlInstance", cloudSqlInstance);

config.addDataSourceProperty("ipTypes", ipTypes);

config.setMaximumPoolSize(5);

config.setMinimumIdle(5);

config.setConnectionTimeout(10000);

config.setIdleTimeout(600000);

config.setMaxLifetime(1800000);

return new HikariDataSource(config);

}

private Properties mySchemaPublicIPHibernateProperties() {

Properties properties = new Properties();

return properties;

}

@Primary

@Bean

public PlatformTransactionManager mySchemaPublicIPTransactionManager() throws NamingException {

final JpaTransactionManager transactionManager = new JpaTransactionManager();

transactionManager.setEntityManagerFactory(mySchemaPublicIPEntityManager().getObject());

return transactionManager;

}

}
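The pool settings in mySchemaPublicIPDataSource() are given in milliseconds, which makes them easy to misread. The sketch below restates them with java.time.Duration and adds a small calculation of how many connections each database may see as Cloud Run scales out. PoolSettings and maxConnectionsPerDatabase are hypothetical names used for illustration only.

```java
import java.time.Duration;

/** Illustrative constants mirroring the HikariCP values used in the configuration classes. */
final class PoolSettings {

    static final int POOL_SIZE = 5; // setMaximumPoolSize(5) and setMinimumIdle(5)

    static final Duration CONNECTION_TIMEOUT = Duration.ofSeconds(10); // setConnectionTimeout(10000)
    static final Duration IDLE_TIMEOUT = Duration.ofMinutes(10);       // setIdleTimeout(600000)
    static final Duration MAX_LIFETIME = Duration.ofMinutes(30);       // setMaxLifetime(1800000)

    /** Upper bound on connections one database sees when Cloud Run runs this many container instances. */
    static int maxConnectionsPerDatabase(int containerInstances) {
        return containerInstances * POOL_SIZE;
    }

    public static void main(String[] args) {
        System.out.println("connectionTimeout ms: " + CONNECTION_TIMEOUT.toMillis());
        System.out.println("idleTimeout ms: " + IDLE_TIMEOUT.toMillis());
        System.out.println("maxLifetime ms: " + MAX_LIFETIME.toMillis());
        System.out.println("connections per database at 3 instances: " + maxConnectionsPerDatabase(3));
    }
}
```

Since minimumIdle equals maximumPoolSize here, the pool is effectively fixed-size, and HikariCP only applies idleTimeout when minimumIdle is lower than maximumPoolSize. Each container instance holds one pool per data source, so the per-database total grows linearly with the number of Cloud Run instances.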

- PrivateIPAddressVPCpostgresConfig.java

package com.henry.democloudsql.configuration;

import com.zaxxer.hikari.HikariConfig;

import com.zaxxer.hikari.HikariDataSource;

import org.springframework.beans.factory.annotation.Value;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.data.jpa.repository.config.EnableJpaRepositories;

import org.springframework.orm.jpa.JpaTransactionManager;

import org.springframework.orm.jpa.JpaVendorAdapter;

import org.springframework.orm.jpa.LocalContainerEntityManagerFactoryBean;

import org.springframework.orm.jpa.vendor.HibernateJpaVendorAdapter;

import org.springframework.transaction.PlatformTransactionManager;

import javax.naming.NamingException;

import javax.sql.DataSource;

import java.util.Properties;

@Configuration

@EnableJpaRepositories(

basePackages = "com.henry.democloudsql.repository.privateipvpc",

entityManagerFactoryRef = "mySchemaVpcEntityManager",

transactionManagerRef = "mySchemaVpcTransactionManager"

)

public class PrivateIPAddressVPCpostgresConfig {

@Value("${spring.datasource.private-ip.url}")

private String url;

@Value("${spring.datasource.private-ip.database}")

private String database;

@Value("${spring.datasource.private-ip.cloudSqlInstance}")

private String cloudSqlInstance;

@Value("${spring.datasource.private-ip.username}")

private String username;

@Value("${spring.datasource.private-ip.password}")

private String password;

@Value("${spring.datasource.private-ip.ipTypes}")

private String ipTypes;

@Value("${spring.datasource.private-ip.socketFactory}")

private String socketFactory;

@Value("${spring.datasource.private-ip.driverClassName}")

private String driverClassName;

@Bean

public LocalContainerEntityManagerFactoryBean mySchemaVpcEntityManager() {

LocalContainerEntityManagerFactoryBean em

= new LocalContainerEntityManagerFactoryBean();

em.setDataSource(mySchemaVpcDataSource());

em.setPackagesToScan("com.henry.democloudsql.model.privateipvpc");

JpaVendorAdapter vendorAdapter = new HibernateJpaVendorAdapter();

em.setJpaVendorAdapter(vendorAdapter);

em.setJpaProperties(mySchemaVpcHibernateProperties());

return em;

}

@Bean

public DataSource mySchemaVpcDataSource() throws IllegalArgumentException {

HikariConfig config = new HikariConfig();

config.setJdbcUrl(url + database);

config.setUsername(username);

config.setPassword(password);

config.addDataSourceProperty("socketFactory", socketFactory);

config.addDataSourceProperty("cloudSqlInstance", cloudSqlInstance);

config.addDataSourceProperty("ipTypes", ipTypes);

config.setMaximumPoolSize(5);

config.setMinimumIdle(5);

config.setConnectionTimeout(10000);

config.setIdleTimeout(600000);

config.setMaxLifetime(1800000);

return new HikariDataSource(config);

}

private Properties mySchemaVpcHibernateProperties() {

Properties properties = new Properties();

return properties;

}

@Bean

public PlatformTransactionManager mySchemaVpcTransactionManager() throws NamingException {

final JpaTransactionManager transactionManager = new JpaTransactionManager();

transactionManager.setEntityManagerFactory(mySchemaVpcEntityManager().getObject());

return transactionManager;

}

}

Entity Models

package com.henry.democloudsql.model.publicip;

import jakarta.persistence.*;

import lombok.*;

@Entity

@Getter

@Setter

@NoArgsConstructor

@AllArgsConstructor

@Builder

@Table(name = "table1")

public class Table1 {

@Id

@GeneratedValue(strategy = GenerationType.IDENTITY)

private Long id;

@Column(name = "name")

private String name;

@Column(name = "age")

private Integer age;

}

package com.henry.democloudsql.model.privateipvpc;

import jakarta.persistence.*;

import lombok.*;

@Entity

@Getter

@Setter

@NoArgsConstructor

@AllArgsConstructor

@Builder

@Table(name = "table2")

public class Table2 {

@Id

@GeneratedValue(strategy = GenerationType.IDENTITY)

private Long id;

@Column(name = "city")

private String city;

@Column(name = "country")

private String country;

}

Repositories

public interface Table1Repository extends CrudRepository<Table1, Long> {

}

public interface Table2Repository extends CrudRepository<Table2, Long> {

}

Services

package com.henry.democloudsql.service;

import com.henry.democloudsql.model.publicip.Table1;

public interface Table1Service {

Table1 save(Table1 obj);

Iterable<Table1> findAll();

Table1 findById(Long id);

}

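package com.henry.democloudsql.service.impl;

import com.henry.democloudsql.model.publicip.Table1;

import com.henry.democloudsql.repository.publicip.Table1Repository;

import com.henry.democloudsql.service.Table1Service;

import org.springframework.stereotype.Service;

@Service

public class Table1ServiceImpl implements Table1Service {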

private final Table1Repository table1Repository;

public Table1ServiceImpl(Table1Repository table1Repository) {

this.table1Repository = table1Repository;

}

@Override

public Table1 save(Table1 obj) {

return table1Repository.save(obj);

}

@Override

public Iterable<Table1> findAll() {

return table1Repository.findAll();

}

@Override

public Table1 findById(Long id) {

return table1Repository.findById(id).orElse(null);

}

}

package com.henry.democloudsql.service;

import com.henry.democloudsql.model.privateipvpc.Table2;

public interface Table2Service {

Table2 save(Table2 obj);

Iterable<Table2> findAll();

Table2 findById(Long id);

}

package com.henry.democloudsql.service.impl;

import com.henry.democloudsql.model.privateipvpc.Table2;

import com.henry.democloudsql.repository.privateipvpc.Table2Repository;

import com.henry.democloudsql.service.Table2Service;

import org.springframework.stereotype.Service;

@Service

public class Table2ServiceImpl implements Table2Service {

private final Table2Repository table2Repository;

public Table2ServiceImpl(Table2Repository table2Repository) {

this.table2Repository = table2Repository;

}

@Override

public Table2 save(Table2 obj) {

return table2Repository.save(obj);

}

@Override

public Iterable<Table2> findAll() {

return table2Repository.findAll();

}

@Override

public Table2 findById(Long id) {

return table2Repository.findById(id).orElse(null);

}

}
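The service interfaces expose three operations: save, findAll, and findById. To exercise that contract without a database, one option is an in-memory stand-in, sketched below in plain Java. Everything here is hypothetical and not part of the project: Row is a simplified substitute for the Table1 entity (id, name, age), and InMemoryRowStore imitates the repository calls, including generated IDs and an Optional result for lookups.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicLong;

/** Hypothetical stand-in for the Table1 entity. */
record Row(Long id, String name, Integer age) {}

/** In-memory store imitating the repository operations the services rely on. */
final class InMemoryRowStore {

    private final Map<Long, Row> rows = new ConcurrentHashMap<>();
    private final AtomicLong nextId = new AtomicLong(1);

    /** Stores the row, assigning an ID when none is set (similar in spirit to an identity column). */
    Row save(Row row) {
        Long id = row.id() != null ? row.id() : nextId.getAndIncrement();
        Row stored = new Row(id, row.name(), row.age());
        rows.put(id, stored);
        return stored;
    }

    /** Returns the row if present; callers decide how to handle a missing ID. */
    Optional<Row> findById(Long id) {
        return Optional.ofNullable(rows.get(id));
    }

    /** Returns a snapshot copy of all stored rows. */
    List<Row> findAll() {
        return new ArrayList<>(rows.values());
    }

    public static void main(String[] args) {
        InMemoryRowStore store = new InMemoryRowStore();
        Row saved = store.save(new Row(null, "Ada", 36));
        System.out.println("saved: " + saved);
        System.out.println("lookup of unknown id present? " + store.findById(99L).isPresent());
    }
}
```

Returning Optional from the lookup keeps the "not found" case explicit; a service method with the signature used in this post can unwrap it with orElse(null) or translate it into an error response.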

package com.henry.democloudsql.controller;

import org.springframework.web.bind.annotation.GetMapping;

import org.springframework.web.bind.annotation.RestController;

import java.util.HashMap;

import java.util.Map;

@RestController

public class HelloWorldController {

@GetMapping

public Map<String,String> helloWorld(){

Map<String,String> map = new HashMap<>();

map.put("msg", "Hello Public IP Address and Private IP Address (VPC) ");

return map;

}

}

package com.henry.democloudsql.controller;

import com.henry.democloudsql.model.publicip.Table1;

import com.henry.democloudsql.service.Table1Service;

import org.springframework.web.bind.annotation.GetMapping;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RestController;

@RestController

@RequestMapping("/api/v1")

public class Table1Controller {

private final Table1Service table1Service;

public Table1Controller(Table1Service table1Service) {

this.table1Service = table1Service;

}

@GetMapping

public Iterable<Table1> findAll(){

return table1Service.findAll();

}

}

package com.henry.democloudsql.controller;

import com.henry.democloudsql.model.privateipvpc.Table2;

import com.henry.democloudsql.service.Table2Service;

import org.springframework.web.bind.annotation.GetMapping;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RestController;

@RestController

@RequestMapping("/api/v2")

public class Table2Controller {

private final Table2Service table2Service;

public Table2Controller(Table2Service table2Service) {

this.table2Service = table2Service;

}

@GetMapping

public Iterable<Table2> findAll(){

return table2Service.findAll();

}

}

Dockerization

The Dockerfile is used to build the Docker image for the Spring Boot application:

FROM openjdk:17-jdk-slim
WORKDIR /app
COPY target/demo-cloudsql.jar app.jar
ENTRYPOINT ["java", "-jar", "app.jar"]

This Dockerfile uses the openjdk:17-jdk-slim base image, sets the working directory to /app, copies the built Spring Boot JAR file (demo-cloudsql.jar) into the container, and specifies the entrypoint to run the JAR file.

Additional Details

- Executing Terraform Commands:

Before deploying the Spring Boot application, run the following Terraform commands to provision the infrastructure:

terraform init
terraform validate
terraform apply -auto-approve

- Building and Deploying Spring Boot Application:

After replacing YOUR_PROJECT_ID in application.yaml with your GCP project ID, build the Spring Boot application:

mvn clean install

Once the Maven build completes, build the Docker image locally using the following command:

docker build -t quickstart-springboot:1.0.1 .

This command builds the Docker image with the tag quickstart-springboot:1.0.1 using the Dockerfile in the current directory.

Deployment and Integration

- Create an Artifact Registry Repository

gcloud artifacts repositories create my-repo --location us-central1 --repository-format docker

This command creates a Docker repository named my-repo in the us-central1 region.

- Push Docker Image to Artifact Registry

docker tag quickstart-springboot:1.0.1 us-central1-docker.pkg.dev/MY_PROJECT_ID/my-repo/quickstart-springboot:1.0.1

Replace MY_PROJECT_ID with your actual GCP project ID.

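Then push the tagged image to Artifact Registry. Docker must be authenticated against the registry first, for example with gcloud auth configure-docker us-central1-docker.pkg.dev: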

docker push us-central1-docker.pkg.dev/MY_PROJECT_ID/my-repo/quickstart-springboot:1.0.1
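The image reference used in the tag and push commands follows the pattern LOCATION-docker.pkg.dev/PROJECT/REPOSITORY/IMAGE:TAG. The small sketch below spells that out; ImageRef is a hypothetical helper for illustration, and MY_PROJECT_ID remains a placeholder.

```java
/** Hypothetical helper that composes an Artifact Registry Docker image reference. */
final class ImageRef {

    static String of(String location, String project, String repository, String image, String tag) {
        // Pattern: LOCATION-docker.pkg.dev/PROJECT/REPOSITORY/IMAGE:TAG
        return String.format("%s-docker.pkg.dev/%s/%s/%s:%s", location, project, repository, image, tag);
    }

    public static void main(String[] args) {
        System.out.println(of("us-central1", "MY_PROJECT_ID", "my-repo", "quickstart-springboot", "1.0.1"));
    }
}
```

The location and repository must match the values passed to gcloud artifacts repositories create, otherwise the push is rejected.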

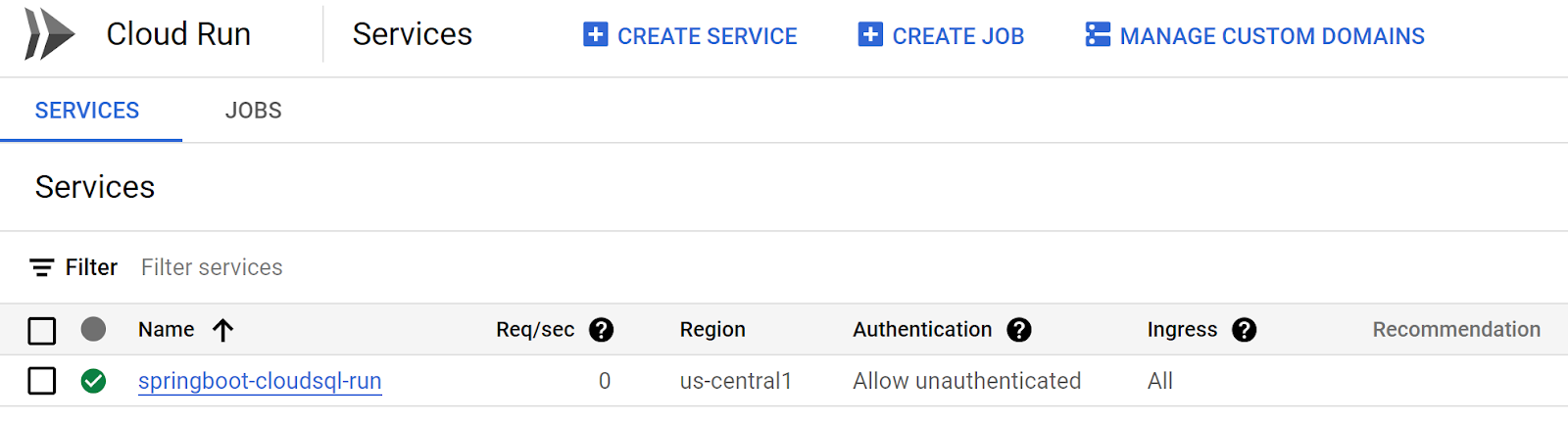

- Deploy to Cloud Run

gcloud run deploy springboot-cloudsql-run \
  --image us-central1-docker.pkg.dev/MY_PROJECT_ID/my-repo/quickstart-springboot:1.0.1 \
  --region=us-central1 \
  --allow-unauthenticated \
  --service-account=cloudsql-service-account-id@MY_PROJECT_ID.iam.gserviceaccount.com \
  --vpc-connector private-cloud-sql

Replace MY_PROJECT_ID with your actual GCP project ID, and springboot-cloudsql-run with the desired name for your Cloud Run service.

This command deploys the Spring Boot application to Cloud Run, using the Docker image from Artifact Registry. It also specifies the service account created by Terraform (cloudsql-service-account-id@MY_PROJECT_ID.iam.gserviceaccount.com) and the VPC Connector (private-cloud-sql), which gives the service a route into the VPC network for the private IP instance.

After executing this command, your Spring Boot application should be deployed and accessible on Cloud Run, with the ability to connect to the provisioned Cloud SQL instances using the appropriate connectivity methods (public IP and private IP).
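A quick way to confirm the deployment is to call the three endpoints defined by the controllers: the root path, /api/v1 (public IP instance), and /api/v2 (private IP instance). The sketch below uses the JDK's java.net.http client. SmokeTestRequests is a hypothetical class, and the base URL is whatever service URL gcloud run deploy printed, passed as the first program argument.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;
import java.util.List;
import java.util.stream.Stream;

/** Hypothetical smoke test for the deployed Cloud Run service. */
final class SmokeTestRequests {

    /** Builds one GET request per controller endpoint under the given service URL. */
    static List<HttpRequest> build(String baseUrl) {
        // Drop a trailing slash so paths are not doubled up.
        String base = baseUrl.endsWith("/") ? baseUrl.substring(0, baseUrl.length() - 1) : baseUrl;
        return Stream.of("/", "/api/v1", "/api/v2")
                .map(path -> HttpRequest.newBuilder(URI.create(base + path))
                        .timeout(Duration.ofSeconds(30))
                        .header("Accept", "application/json")
                        .GET()
                        .build())
                .toList();
    }

    public static void main(String[] args) throws Exception {
        HttpClient client = HttpClient.newHttpClient();
        for (HttpRequest request : build(args[0])) {
            HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
            System.out.println(request.uri() + " -> " + response.statusCode());
        }
    }
}
```

A successful response from /api/v2 is the more telling signal, because that request only works when the VPC Connector and the private services connection are both in place.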
